Step-by-step guide to integrating Microsoft 365 Copilot declarative agents with Azure OpenAI

Introduction

In this post, I’ll walk you through how to call Azure OpenAI APIs from a Copilot declarative agent, without writing any code, by using Microsoft 365 Agents Toolkit. The toolkit can import an OpenAPI specification as an action for the agent, so the integration comes down to describing the Azure OpenAI endpoint in a spec and configuring a few settings.

Step 1: Create and Import an OpenAPI Specification

Microsoft provides a pre-built OpenAPI specification for Azure OpenAI, but it’s often broader than needed. To simplify, I generated a targeted OpenAPI spec with Copilot by using the endpoint URL and a sample request body as a prompt. This trimmed version was then imported into Microsoft 365 Agents Toolkit as a declarative action.

Importing the OpenAPI Spec

You can import the spec directly into Microsoft 365 Agents Toolkit.

Run the API

Once imported, you can invoke the Azure OpenAI API after provisioning the solution.

Step 2: Sample OpenAPI Specification

Here’s a trimmed-down OpenAPI spec that targets the chat/completions endpoint. It uses placeholders like ${{MODEL}} and ${{API_VERSION}} to dynamically pull values from your .env file:


    openapi: 3.0.4
    info:
      title: Azure OpenAI API
      description: OpenAPI specification for Azure OpenAI services.
      version: 1.0.0
    servers:
      - url: https://rescontentsafe1749361086.openai.azure.com
        description: Azure OpenAI service endpoint
    paths:
      /openai/deployments/{model}/chat/completions:
        post:
          summary: Generate AI responses based on user input
          operationId: getVolunteeringOpportunities
          tags:
            - OpenAI
          description: >-
            This endpoint generates a response based on the conversation history
            provided in the request body.
          parameters:
            - name: api-version
              in: query
              required: true
              schema:
                type: string
                description: API version (e.g., "2025-01-01-preview").
                default: "${{API_VERSION}}"
            - name: model
              in: path
              required: true
              schema:
                type: string
                description: Model deployed.
                default: "${{MODEL}}"
          requestBody:
            required: true
            content:
              application/json:
                schema:
                  type: object
                  properties:
                    messages:
                      type: array
                      description: Conversation history with system and user messages.
                      items:
                        type: object
                        properties:
                          role:
                            type: string
                            enum: [system, user, assistant]
                          content:
                            type: string
                    temperature:
                      type: number
                      description: Sampling temperature between 0 and 2.
                      default: ${{TEMPERATURE}}
                    max_tokens:
                      type: integer
                      description: Maximum number of tokens to generate.
                      default: ${{MAX_TOKENS}}
          responses:
            '200':
              description: AI-generated completion response
              content:
                application/json:
                  schema:
                    type: object
                    properties:
                      id:
                        type: string
                      choices:
                        type: array
                        items:
                          type: object
                          properties:
                            # chat/completions returns each choice's text under message.content
                            message:
                              type: object
                              properties:
                                role:
                                  type: string
                                content:
                                  type: string
                            finish_reason:
                              type: string
                      usage:
                        type: object
                        properties:
                          prompt_tokens:
                            type: integer
                          completion_tokens:
                            type: integer
                          total_tokens:
                            type: integer
    security:
      - apikey: []
    components:
      securitySchemes:
        apikey:
          type: apiKey
          in: header
          name: api-key
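To make the spec more concrete, here is a minimal Python sketch of the HTTP request it describes. The function name build_chat_request and the sample values are my own illustrative assumptions; only the path, the api-version query parameter, the api-key header, and the body fields come from the spec. The request is built but not sent, so it can be inspected without a real key.

```python
import json
import urllib.parse
import urllib.request


def build_chat_request(endpoint, model, api_version, api_key, messages,
                       temperature=0.7, max_tokens=800):
    """Build (but do not send) the POST request described by the spec."""
    # {model} in the path is the deployment name; percent-encode it to be safe.
    deployment = urllib.parse.quote(model, safe="")
    path = f"/openai/deployments/{deployment}/chat/completions"
    query = urllib.parse.urlencode({"api-version": api_version})
    url = f"{endpoint.rstrip('/')}{path}?{query}"
    body = {
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        # The apiKey security scheme sends the key in the "api-key" header.
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical values for illustration only.
request = build_chat_request(
    endpoint="https://my-resource.openai.azure.com",
    model="gpt-4o",
    api_version="2025-01-01-preview",
    api_key="<your-api-key>",
    messages=[{"role": "user", "content": "Hello"}],
)
print(request.get_method(), request.full_url)
```

Passing the resulting object to urllib.request.urlopen would send it; in the declarative agent, the equivalent call is made for you based on the spec.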

Step 3: Notes on Server URL Templating

OpenAPI 3.0+ supports server templating, where variables in the server URL are declared with a default and an optional list of allowed values:

    servers:
      - url: https://rescontentsafe1749361086.openai.azure.com/openai/deployments/{model}
        description: Azure OpenAI service endpoint
        variables:
          model:
            description: The name of the deployed model.
            default: gpt-4o
            enum:
              - gpt-35-turbo
              - gpt-4o
              - gpt-4-32k

Caveats

I attempted to template the server URL to avoid hardcoding values and instead use variables defined in the .env file. Unfortunately, this approach did not work as expected.

This is surprising, since server templating is part of the OpenAPI specification, as outlined in API Server and Base Path and Path Templating.

The workaround is to hard-code the server URL in the spec.

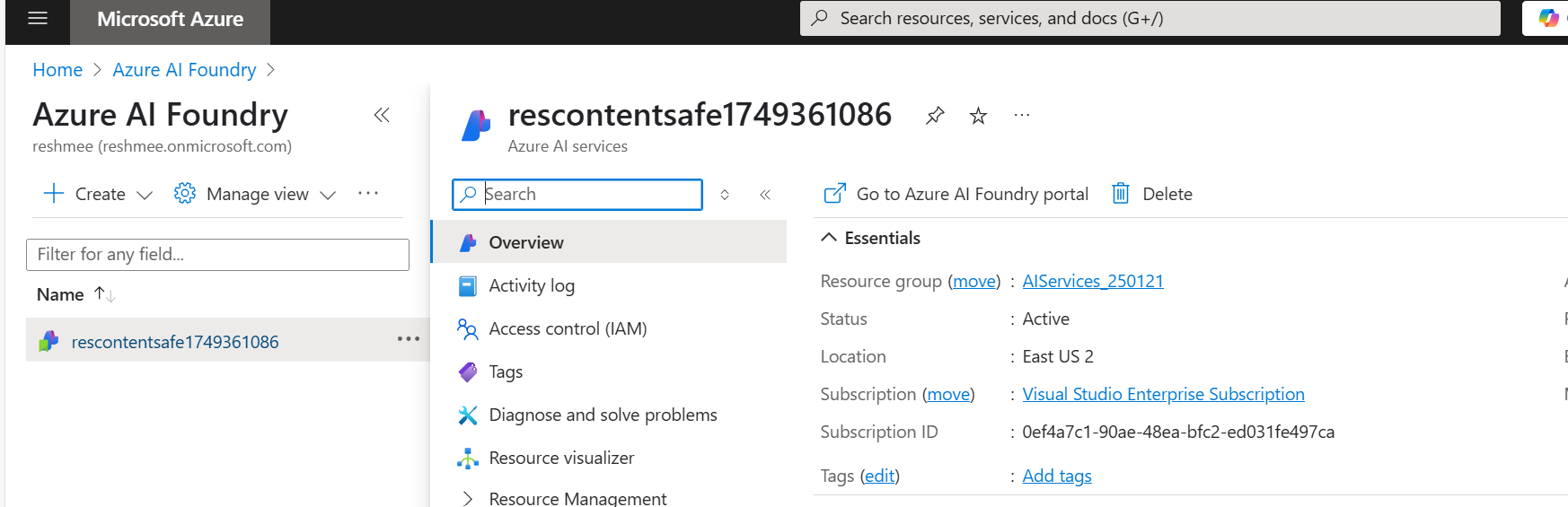

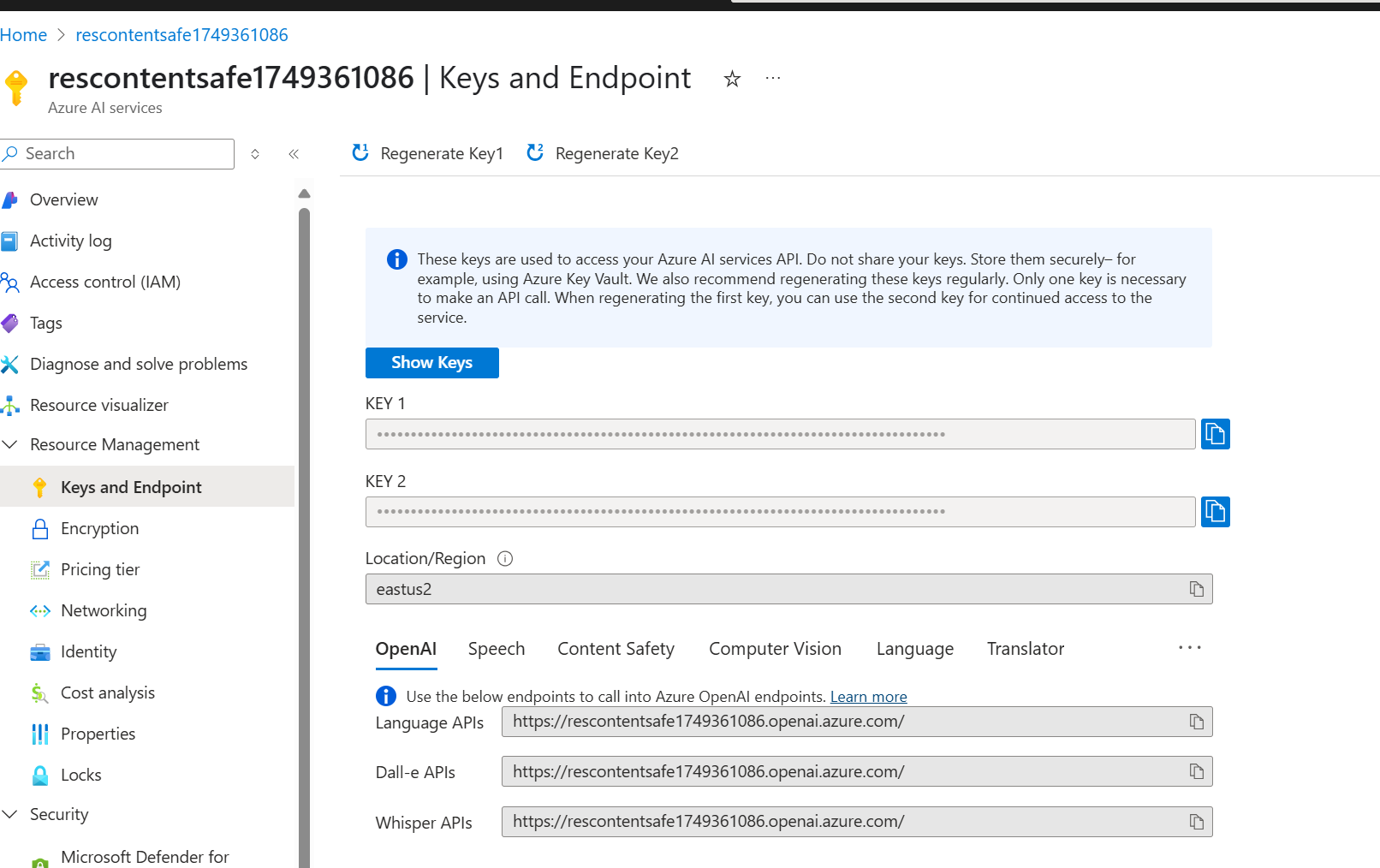
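For reference, this is how server variables are meant to resolve under the OpenAPI 3.0 rules: each {name} in the URL is replaced by the supplied value or, failing that, the variable's default, and a value outside the enum is rejected. The sketch below is a hypothetical Python helper (expand_server_url is not part of any toolkit, and the resource name is a placeholder). It illustrates the expected behavior only, not what Microsoft 365 Agents Toolkit does internally.

```python
import re


def expand_server_url(server, overrides=None):
    """Substitute {name} placeholders in an OpenAPI server URL."""
    overrides = overrides or {}
    variables = server.get("variables", {})

    def resolve(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"server variable '{name}' is not declared")
        spec = variables[name]
        # Use the caller's value if given, otherwise the declared default.
        value = overrides.get(name, spec["default"])
        allowed = spec.get("enum")
        if allowed is not None and value not in allowed:
            raise ValueError(f"'{value}' is not an allowed value for '{name}'")
        return value

    return re.sub(r"\{([^{}]+)\}", resolve, server["url"])


# Mirrors the server object above, with a placeholder resource name.
server = {
    "url": "https://my-resource.openai.azure.com/openai/deployments/{model}",
    "variables": {
        "model": {
            "default": "gpt-4o",
            "enum": ["gpt-35-turbo", "gpt-4o", "gpt-4-32k"],
        }
    },
}

print(expand_server_url(server))
print(expand_server_url(server, {"model": "gpt-35-turbo"}))
```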

Step 4: Set Up Azure OpenAI

To use the OpenAPI spec, follow these steps: Before invoking the API, you’ll need to:

Create an Azure OpenAI Resource Set up an Azure OpenAI resource in Azure portal.

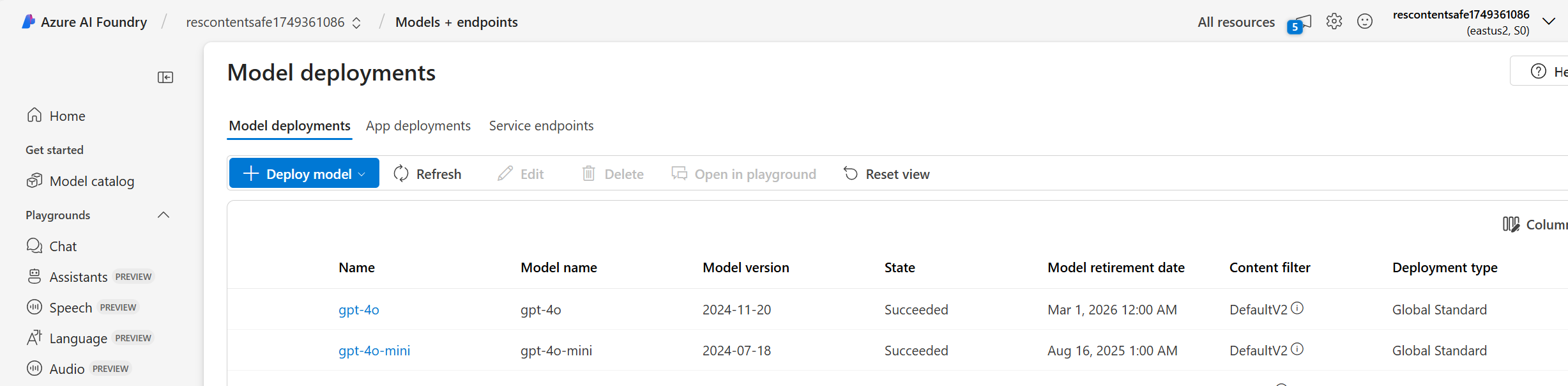

Deploy a Model Deploy a model such as gpt-4o or gpt-35-turbo to your Azure OpenAI resource.

- Copy the Endpoint and Key

Copy the endpoint URL and API key from your Azure OpenAI resource.

Step 5: Configure .env File

Define the following variables in your .env file to configure the OpenAPI spec:

    # Not used by the spec: referencing the resource name from the OpenAPI spec did not work
    RESOURCE_NAME=rescontentsafe1749361086
    MODEL=gpt-4o
    API_VERSION=2025-01-01-preview
    TEMPERATURE=0.7
    MAX_TOKENS=800
    TOP_P=0.95
    FREQUENCY_PENALTY=0
    PRESENCE_PENALTY=0
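The ${{NAME}} placeholders in the spec are filled in from these variables. As a rough illustration of that substitution, the sketch below parses .env-style lines and expands the placeholders in a string, leaving unknown names untouched. It is a hypothetical re-implementation in Python with made-up function names, not the toolkit's actual code.

```python
import re


def parse_env(text):
    """Parse KEY=VALUE lines, ignoring blank lines and '#' comments."""
    values = {}
    for raw in text.splitlines():
        # Simplification: everything after '#' is treated as a comment.
        line = raw.split("#", 1)[0].strip()
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip()
    return values


def expand_placeholders(template, values):
    """Replace ${{NAME}} with values[NAME]; leave unknown names as-is."""
    return re.sub(
        r"\$\{\{\s*(\w+)\s*\}\}",
        lambda m: values.get(m.group(1), m.group(0)),
        template,
    )


env_text = """
MODEL=gpt-4o
API_VERSION=2025-01-01-preview  # preview API version
MAX_TOKENS=800
"""
values = parse_env(env_text)
print(expand_placeholders('default: "${{MODEL}}"', values))
```

A placeholder with no matching variable stays in the output unchanged, which makes a missing .env entry easy to spot.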

Step 6: Register the API Key in Microsoft 365 Agents Toolkit

- Go to the Teams developer portal.

- Navigate to Tools > API key registration.

- Register your API with the following information:

  - API key: Add a secret with the Azure AI Services key you copied in Step 4

  - API key name: e.g., Azure AI Services Key Name

  - Base URL: The Azure AI Services endpoint URL you copied in Step 4

  - Target tenant: Home tenant

  - Restrict usage by app: Any Teams app (when the agent is deployed, use the Teams app ID)

Save the information. A new API key registration ID will be generated. Copy this ID.

Step 7: Configure ai-plugin.json

Set reference_id to the API key registration ID you copied in Step 6, so that the plugin references that registration. In the snippet below, the value is supplied through the APIKEYAUTH_REGISTRATION_ID environment variable.

    "runtimes": [
      {
        "type": "OpenApi",
        "auth": {
          "type": "ApiKeyPluginVault",
          "reference_id": "${{APIKEYAUTH_REGISTRATION_ID}}"
        },
        "spec": {
          "url": "apiSpecificationFile/openapi.yaml"
        },
        "run_for_functions": [
          "getVolunteeringOpportunities"
        ]
      }
    ]
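The names listed in run_for_functions need to line up with an operationId in the OpenAPI spec (here, getVolunteeringOpportunities). A quick way to catch a mismatch is a small check like the following Python sketch. The function name and the inline sample documents are illustrative assumptions, and the regex is a dependency-free shortcut where a real YAML parser would be more robust.

```python
import json
import re


def missing_operations(plugin_json, openapi_yaml):
    """Return run_for_functions names that have no matching operationId."""
    manifest = json.loads(plugin_json)
    # Collect every operationId value that appears in the spec text.
    operation_ids = set(
        re.findall(r"^\s*operationId:\s*['\"]?([\w.-]+)", openapi_yaml, re.MULTILINE)
    )
    referenced = [
        name
        for runtime in manifest.get("runtimes", [])
        for name in runtime.get("run_for_functions", [])
    ]
    return [name for name in referenced if name not in operation_ids]


# Trimmed-down samples based on the snippets in this post.
plugin_json = """
{
  "runtimes": [
    {
      "type": "OpenApi",
      "spec": {"url": "apiSpecificationFile/openapi.yaml"},
      "run_for_functions": ["getVolunteeringOpportunities"]
    }
  ]
}
"""
openapi_yaml = """
paths:
  /openai/deployments/{model}/chat/completions:
    post:
      operationId: getVolunteeringOpportunities
"""

print(missing_operations(plugin_json, openapi_yaml))
```

An empty list means every referenced function has a matching operation in the spec.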

Step 8: Update the OpenAPI Spec Server URL

Because server URL templating did not work (see Step 3), the server URL in the OpenAPI spec has to point directly at your own Azure OpenAI resource.

Replace the server URL in the sample spec with the endpoint URL of your Azure OpenAI resource. For example:

    servers:
      - url: https://<your-resource-name>.openai.azure.com
        description: Azure OpenAI service endpoint
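Because the path in the spec already starts with /openai/deployments, the server URL should be just the scheme and host. If the endpoint you copied carries a trailing slash or a path, a small helper like the one below can reduce it to that form. This is a minimal Python sketch under that assumption; server_url_from_endpoint is a hypothetical name and the resource name is a placeholder.

```python
from urllib.parse import urlsplit


def server_url_from_endpoint(endpoint):
    """Reduce a copied endpoint URL to scheme + host for the servers entry."""
    parts = urlsplit(endpoint.strip())
    if parts.scheme != "https" or not parts.hostname:
        raise ValueError(f"expected an https endpoint, got: {endpoint!r}")
    # Drop any path, query, or trailing slash so that appending the spec's
    # path does not produce a double slash.
    return f"https://{parts.hostname}"


print(server_url_from_endpoint("https://my-resource.openai.azure.com/"))
```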

Solution Download

📦 You can download the solution from Call chat completions from Azure Open AI - No code!

Use Cases

This approach lets Microsoft 365 Copilot declarative agents use capabilities provided by other models available from Azure OpenAI.

Other use cases: Azure AI Search

In the solution I built with Lee Ford, Azure AI Search is called using a similar approach.

Conclusion

Using Microsoft 365 Agents Toolkit and OpenAPI specifications, you can integrate Azure OpenAI services into your Copilot agent. While some features, such as server URL templating, may not work as expected, this approach lets you build declarative agents with little or no code.

If you have any questions or suggestions, feel free to leave a comment or reach out!
